Whose data is worth more?

A tale of two data vendors

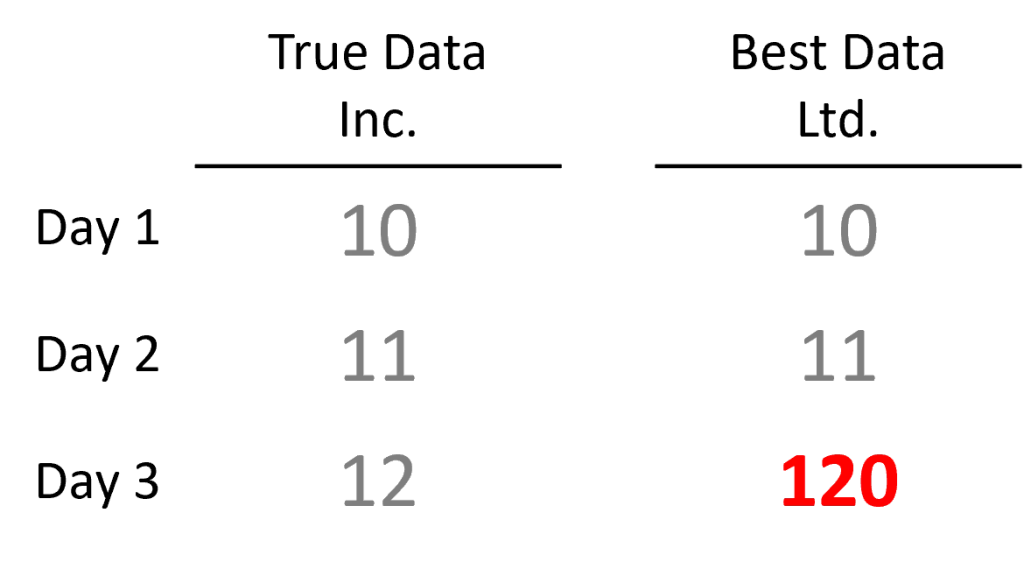

Imagine two companies go into business selling data to hedge funds. Both analyze satellite imagery to count how many cars come to a particular Walmart each day.

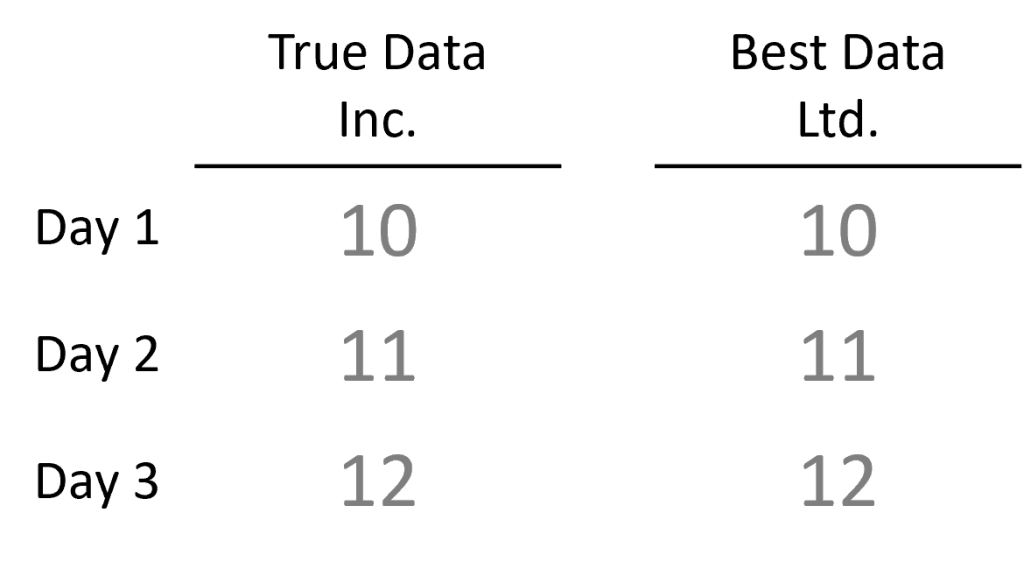

After the first 3 days, their databases show the following:

A few days later, a customer contacts Best Data Ltd. informing them that their day 3 observation is impossible because the Walmart parking lot only has 50 parking spots. Best Data Ltd. dutifully checks, realizes a typo was made, and updates its database to reflect the correct data.

Thus, on day 7, the database for each company looks like this

A few months later, both companies start marketing

When a hedge fund considers whose data to buy, they run a backtest on each vendor’s historical data. The backtests look great (of course). But in the case of Best Data Ltd, the backtest would look much worse if the day 3 typo was in the historical data.

How does a hedge fund figure out which of the two companies has valuable data? How much is the good data worth? How valuable is it to avoid the bad data?

The underlying problem is adverse selection and a market for lemons. The presence of bad data in the market makes data generally less valuable, and makes data transactions less likely.

Imagine there were 99 companies like Best Data Ltd., with hidden typos in their historical data, and 1 company like True Data Inc. A hedge fund would have to incur the expense of researching a huge number of vendors before finding the one with profitable data.

Data due diligence is a non-trivial process, with significant expense and uncertainty. While diligence and trials are taking place, neither the vendor nor the fund can monetize the data. Valuable data sometimes degrades quickly, so time is of the essence.

Even after conducting research, a fund may choose to exit the market to avoid the risk of accidentally trusting the wrong data.

What about True Data Inc, the data vendor with the great data? They did all the right things, they made sure their data was high quality with no transcription errors. They invested in their data. But they still lost the sale.

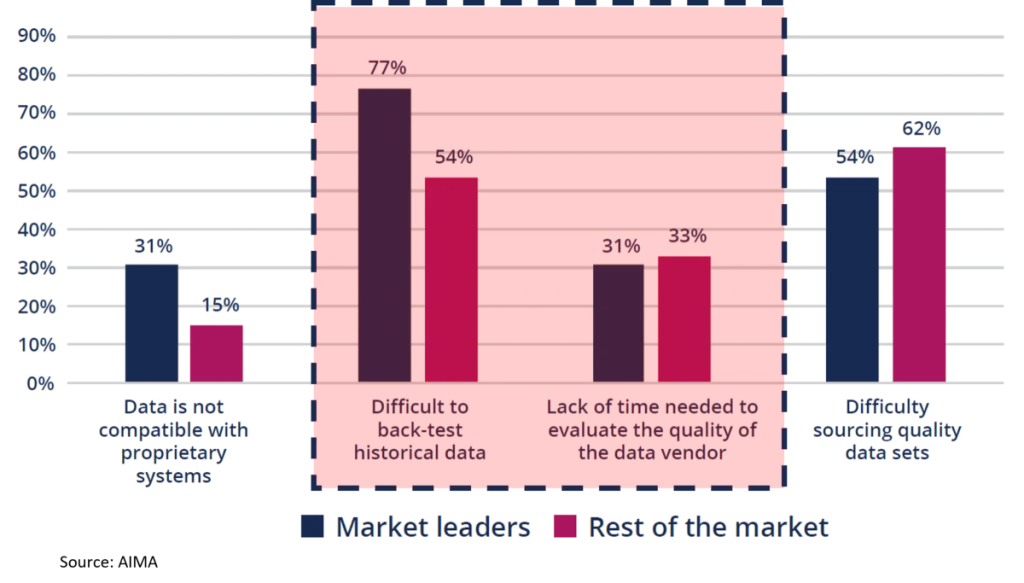

It’s no accident that funds say running reliable backtests is their number one problem with alternative data.

How vBase solves this problem

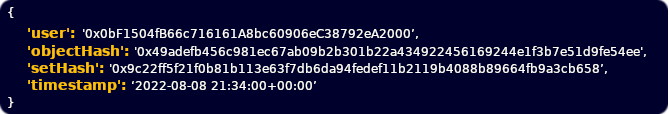

vBase allows both data companies to make auditable point-in-time cryptographic commitments of their data. Here is what a vStamp looks like:

The objectHash is a unique content identifier mapping the underlying database to a 256-bit hash value. The timestamp is assigned via an incorruptible consensus based process. The stamp data itself is stored on an immutable append-only public ledger.

The vStamps live on the Polygon network, a public blockchain (more networks coming soon). On their own the stamps are meaningless encrypted data. However, when paired with the underlying digital records, the vStamps can mathematically prove when a particular data record came into existence and who stamped it.

In the example of True Data and Best Data, both companies would use vBase to create provenance stamps for their database each day. The resulting set of vStamps would look like this:

The vStamps create a fully auditable bitemporal database, which allows each vendor to communicate the hidden quality of its data. Since there would be no way for Best Data Ltd. to create the same set of vStamps as True Data Inc., a potential customer could immediately determine which was which.

Moreover, the bitemporal vStamp record would allow a potential customer to run backtests over provably point-in-time data, assuring full time-safety of the underlying input data and the backtest simulation.

Because the vStamps sit on a public distributed ledger, they are verifiable over long time periods and they can be verified independently of vBase. Even if vBase disappeared, new vStamps could continue to be made and existing vStamps could continue to be validated.

Want to learn more?

Contact hello@vbase.com for more information

Recent Posts

Why Great Returns Don’t Attract Investors

Why Great Returns Don’t Attract Investors

Many investors are unable to convert strong returns into clients or capital. Learn why and how to fix it.

Mitigating BorgBackup Client Compromise

Mitigating BorgBackup Client Compromise

A compromised BorgBackup client allows undetectable tampering with past backups. vBase offers a simple solution.

Stop Using Brokerage Statements to Show Your Track Record

Stop Using Brokerage Statements to Show Your Track Record

Many traders use brokerage statements to create a verifiable track record. Unfortunately they are not credible for this purpose. A better alternative exists.